BLOOMZ

No description provided.

BigScience Workshop

Multitask Fine-tuned Language Model

Tracking models and their artifacts in a systematic, versionable, and open source repository.

Model cards and system cards are considered the gold standard for providing model-to-model comparisons between foundation models. They document the system in question well, but often fall short on tracing model genealogy, machine readability, reliable centralized management, and incentives for their creation. Tracing the provenance of foundation models and their derivatives, for example, is essential to managing licensing and legislative risks in the AI ecosystem, because it lets researchers understand a model’s entire history and dependencies.

It is also well known that documents such as model cards on Hugging Face are produced inconsistently, in formats that are not machine-readable, and often contain erroneous information or omit details.

We argue these are symptoms of a deeper issue: creating model cards or system cards is a manual process in which human effort is duplicated and errors creep in, notably when a new model inherits most of its design from a foundation model.

To close this gap, we propose a machine-readable model specification format that can flexibly capture model information. Importantly, this format explicitly delineates the relationships between upstream and downstream models, as well as their associated data. To facilitate adoption, we have released the Unified Model Record (UMR) repository, a semantically versioned repository of model specifications. It improves traceability and transparency in the AI ecosystem while remaining user-friendly, which should motivate machine learning practitioners to maintain documentation.
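To make the idea of explicit upstream and downstream relationships concrete, here is a minimal Python sketch. It is not the UMR schema: the `ModelRecord` class, its field names, the `lineage` helper, and the `bloomz-custom` entry are all hypothetical, chosen only to show how a machine-readable record lets a model's ancestry be traced automatically.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical record; field names are illustrative, not the UMR schema."""
    name: str
    version: str            # semantic version of the record itself
    publisher: str = ""
    model_type: str = ""
    upstream: tuple = ()    # names of the models this one derives from
    datasets: tuple = ()    # names of the datasets associated with this model


def lineage(name, registry):
    """Return every ancestor of `name`, nearest first, without repeats."""
    seen = []
    queue = list(registry[name].upstream)
    while queue:
        parent = queue.pop(0)
        if parent in seen:
            continue
        seen.append(parent)
        # Ancestors missing from the registry are listed but not expanded.
        if parent in registry:
            queue.extend(registry[parent].upstream)
    return seen


# Illustrative entries; "bloomz-custom" is a made-up downstream model.
registry = {
    "bloom": ModelRecord("bloom", "1.0.0", "BigScience Workshop",
                         "Large Language Model"),
    "bloomz": ModelRecord("bloomz", "1.0.0", "BigScience Workshop",
                          "Multitask Fine-tuned Language Model",
                          upstream=("bloom",), datasets=("xP3",)),
    "bloomz-custom": ModelRecord("bloomz-custom", "0.1.0",
                                 upstream=("bloomz",)),
}

ancestors = lineage("bloomz-custom", registry)
print(ancestors)
```

Because each record names its parents, a downstream record only has to state what it changes, and questions such as "which licenses does this model inherit?" reduce to a walk over the ancestor list.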

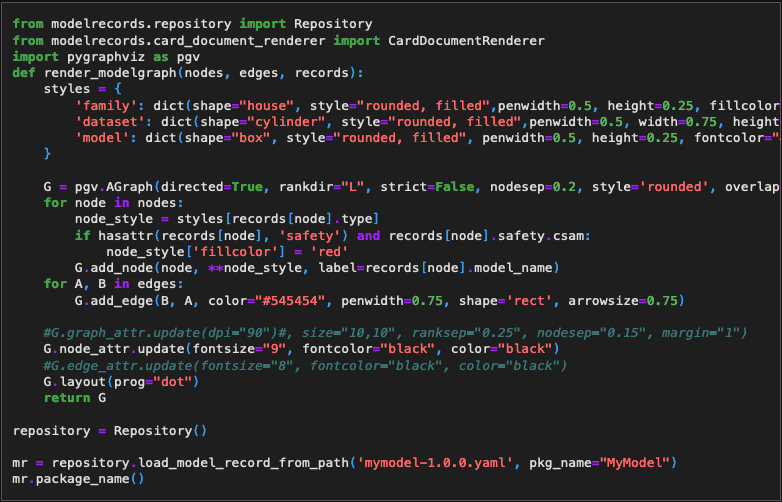

The tool can generate the following outputs: LaTeX, PDF, HTML, and GraphViz.
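As a rough illustration of the GraphViz output, the sketch below renders upstream-to-downstream pairs as a DOT digraph. It is not the tool's own generator: the `to_dot` function and the edge set are assumptions made for this example, and the real tool's output may be structured differently.

```python
def to_dot(edges):
    """Render (upstream, downstream) name pairs as a Graphviz DOT digraph."""
    lines = ["digraph models {"]
    # Sort the pairs so the output is stable across runs.
    for upstream, downstream in sorted(edges):
        lines.append(f'  "{upstream}" -> "{downstream}";')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical edge set: one base model and one fine-tuned derivative.
edges = {("bloom", "bloomz")}
dot = to_dot(edges)
print(dot)
```

The resulting text can be saved to a `.dot` file and rendered with the standard Graphviz `dot` command, for example `dot -Tpng models.dot -o models.png`.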

git clone git@github.com:modelrecords/exampleusage.git

conda env create -f environment.yml

conda activate modelrecords_exampleusage

This is the 70B variant.

Meta

Large Language Model
